
To Train or Not to Train? A Practical Guide to Customizing LLMs for Your Business

When customizing LLMs, the goal isn't to rebuild the foundation; it's to build your unique application on top of the strongest one available.

By Preetam Jinka

Co-founder and Chief Architect

Sep 10, 2025

4 min read

Many companies possess vast internal knowledge bases—documents, manuals, and books—and ask a reasonable question: "Why can't we feed all this to a model and train our own powerful LLM?"

This question points to two fundamental business needs: making AI models more capable in general and making them experts in a specific domain. While training a model from scratch seems like the ultimate solution, modern techniques like RAG and fine-tuning offer more practical, efficient paths for most businesses.

How an LLM Actually Learns

At its core, a Large Language Model is a sophisticated next-word predictor. It is trained on vast amounts of text to guess the next "token," which can be a word or part of a word.

Across an enormous number of predictions, each error nudges the model's weights slightly, and knowledge and reasoning abilities gradually become embedded in those weights. The process is less about memorizing individual facts than about learning the statistical patterns that govern language and, by extension, the concepts that language represents.
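Here is a deliberately tiny Python sketch of that loop. It is a bigram model: it looks only at the previous token, whereas a real LLM uses a transformer over a long context and has billions of weights. Its "weights" are a table of scores trained with the same basic cycle: predict the next token, measure the error with cross-entropy loss, and nudge the weights with gradient descent. The corpus and all settings are made up for illustration.

```python
import math
import random

# Toy corpus (illustrative only); real models train on trillions of tokens.
corpus = "the cat sat on the mat the cat ate the rat".split()
vocab = sorted(set(corpus))
index = {tok: i for i, tok in enumerate(vocab)}
V = len(vocab)

# The "weights": one row of scores (logits) per previous token.
random.seed(0)
W = [[random.uniform(-0.1, 0.1) for _ in range(V)] for _ in range(V)]

# Training pairs: (previous token, true next token).
pairs = [(index[a], index[b]) for a, b in zip(corpus, corpus[1:])]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def avg_loss():
    # Average cross-entropy: how "surprised" the model is by each true next token.
    return sum(-math.log(softmax(W[p])[n]) for p, n in pairs) / len(pairs)


initial_loss = avg_loss()
learning_rate = 0.5
for epoch in range(200):
    for prev, nxt in pairs:
        probs = softmax(W[prev])  # predict
        for j in range(V):
            # Gradient of cross-entropy w.r.t. logit j: p_j minus 1 for the true token.
            grad = probs[j] - (1.0 if j == nxt else 0.0)
            W[prev][j] -= learning_rate * grad  # correct
final_loss = avg_loss()


def predict_next(token):
    probs = softmax(W[index[token]])
    return vocab[max(range(V), key=lambda j: probs[j])]


print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
print(predict_next("the"))  # "cat", the most frequent follower of "the" in the corpus
```

Nothing in the code states a rule like "cat follows the"; the preference emerges in the weights from repeated correction, which is the point of the analogy that follows.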

The popular "chain of thought" or "step-by-step" reasoning extends the same idea. The model learns that writing out correct intermediate steps makes it much more likely to predict the correct final answer.

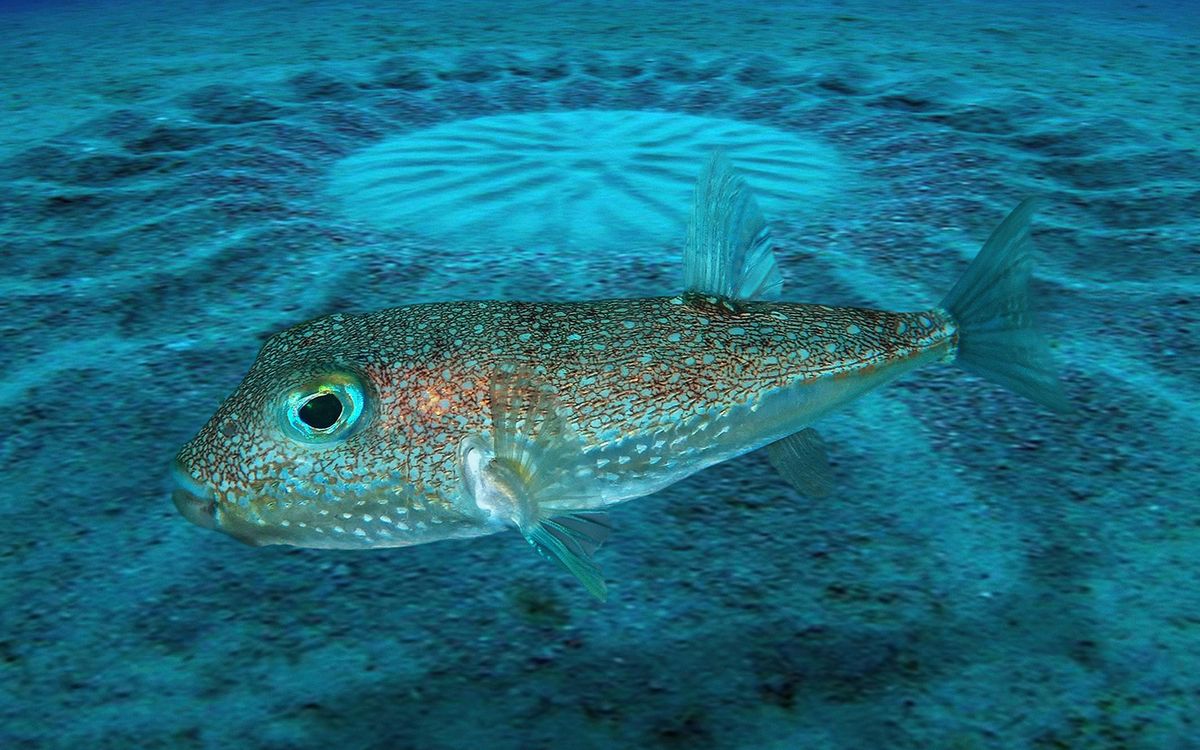

Consider the male Japanese pufferfish, which sculpts intricate, geometric masterpieces in the sand of the seafloor to attract mates.

No one teaches the pufferfish how to do this; the complex knowledge is "trained" into its DNA and brain through millions of years of evolution. LLMs are similar. Their incredible abilities aren't explicitly programmed; they emerge from an intensive training process that embeds knowledge deep within their neural networks.

The Reality of Training from Scratch

Training a foundational model from scratch requires immense resources. It demands thousands of specialized GPUs running for months, a massive and meticulously cleaned dataset measured in terabytes, and a dedicated team of specialist researchers and engineers.
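A back-of-the-envelope calculation shows where "thousands of GPUs for months" comes from. It uses the widely cited rule of thumb that training a dense transformer takes roughly 6 floating-point operations per parameter per training token. Every input below is an assumption chosen for illustration, not a figure for any real model, and the estimate ignores failed runs, experiments, and data preparation, all of which add to the real cost.

```python
def training_days(params, tokens, num_gpus, flops_per_gpu, utilization):
    """Rough wall-clock days to train, using compute ~= 6 * params * tokens."""
    total_flops = 6 * params * tokens
    effective_rate = num_gpus * flops_per_gpu * utilization  # FLOP/s actually achieved
    return total_flops / effective_rate / 86_400  # 86,400 seconds per day


# Hypothetical large model and cluster; every number here is an assumption.
days = training_days(
    params=400e9,        # 400 billion parameters
    tokens=15e12,        # 15 trillion training tokens
    num_gpus=16_000,     # cluster size
    flops_per_gpu=1e15,  # ~1 PFLOP/s peak per GPU, on the order of a current data-center GPU
    utilization=0.4,     # fraction of peak throughput achieved in practice
)
print(f"about {days:.0f} days")
```

With these assumptions the result is roughly two months on sixteen thousand GPUs, before counting any of the surrounding work.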

Think of it this way: training a model from scratch is like giving a stranger a full four-year college education. It teaches them fundamental knowledge, critical thinking, and how to write. It’s foundational but incredibly expensive and time-consuming. You wouldn't do this for every new hire.

The smarter start is to hire a candidate who already has a degree—a powerful foundation model from a provider like Google, OpenAI, or Anthropic.

Smarter Customization: Fine-Tuning, RAG, and Tools

Once you have your pre-trained model, you can specialize it using more targeted methods.

Fine-Tuning: The Weekend Workshop

Fine-tuning takes a pre-trained model and continues the training process on a smaller, specialized dataset. The goal is usually not to teach it new knowledge but to adjust its style, tone, or behavior.
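To show what "a smaller, specialized dataset" can look like, here is a minimal Python sketch that assembles tone-and-style training examples into JSON Lines, one example per line. The `messages`/`role`/`content` layout is an assumption modeled on the chat format several hosted fine-tuning services accept; check your provider's documentation for its exact schema. The company name and replies are hypothetical.

```python
import json

# Hypothetical examples: each shows the model the exact tone and format we want,
# here a warm, concise reply that always ends by offering a next step.
SYSTEM = "You are the support assistant for Acme Co."
examples = [
    {"messages": [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": "My order hasn't arrived yet."},
        {"role": "assistant", "content": "Sorry for the wait! Share your order number and I'll check its status right away."},
    ]},
    {"messages": [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": "Can I change my shipping address?"},
        {"role": "assistant", "content": "Happy to help! If the order hasn't shipped, reply with the new address and I'll update it."},
    ]},
]


def is_valid(example):
    """Basic sanity checks: non-empty turns, ending with the reply the model should learn."""
    messages = example.get("messages", [])
    return (
        len(messages) >= 2
        and messages[-1]["role"] == "assistant"
        and all(m["content"].strip() for m in messages)
    )


def to_jsonl(rows):
    bad = [i for i, row in enumerate(rows) if not is_valid(row)]
    if bad:
        raise ValueError(f"invalid examples at positions {bad}")
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows) + "\n"


jsonl = to_jsonl(examples)
print(jsonl)
```

A real dataset would contain many more examples, all written to the same consistent standard, and would be saved to a file for upload.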

There are catches. You still need very high-quality data, and fine-tuned models can be expensive to deploy: they often require dedicated hosting, which carries a premium over pay-per-request APIs. Fine-tuning can also lock you into a specific model version. It is like forking a programming language: you become responsible for maintaining the fork and miss out on updates to the base model.
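The hosting premium is easier to judge with a quick break-even calculation: below a certain monthly volume, paying per request is cheaper than keeping a dedicated deployment running. Both prices in this Python sketch are hypothetical placeholders, not quotes from any provider.

```python
def break_even_requests(dedicated_cost_per_month: float, cost_per_request: float) -> float:
    """Monthly requests at which dedicated hosting and pay-per-request cost the same."""
    return dedicated_cost_per_month / cost_per_request


# Hypothetical prices for illustration only.
dedicated = 5_000.0   # dollars per month for an always-on fine-tuned deployment (assumed)
per_request = 0.002   # dollars per request on a pay-per-request API (assumed)

volume = break_even_requests(dedicated, per_request)
print(f"Break-even at about {volume:,.0f} requests per month")
```

With these made-up numbers, dedicated hosting only pays off above roughly 2.5 million requests a month; below that, the fine-tuned model costs more to serve.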

RAG: The Employee Handbook

Retrieval-Augmented Generation (RAG) doesn't change the model's weights. Instead, it connects the model to a knowledge base (your documents, databases, or internal sites). When a question comes in, the system retrieves the most relevant passages and adds them to the model's prompt, so the answer can draw on current information.

Fine-tuning changes the model's weights, which stay fixed once training ends. RAG supplies dynamic, up-to-the-minute information at the moment each question is asked. This is how you teach a model about your business data.
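The retrieve-then-prompt loop can be sketched in a few lines of Python. This is a minimal sketch under simplifying assumptions: the documents are hypothetical, and relevance is scored with word-count cosine similarity, a simple stand-in for the embedding models and vector databases that production RAG systems typically use. The final prompt would be sent to whichever foundation model you use; that call is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; in practice these come from your knowledge base.
documents = {
    "returns-policy": "Customers may return unused items within 30 days for a full refund.",
    "shipping": "Standard shipping takes 3 to 5 business days; express shipping takes 1 day.",
    "warranty": "All hardware carries a two-year limited warranty covering manufacturing defects.",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two word-count vectors.
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, k=1):
    """Return the k (doc_id, text) pairs most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question):
    # The retrieved passages become part of the prompt; the model's weights never change.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


print(build_prompt("How many days do I have to return unused items?"))
```

Updating the answer is as simple as editing the `documents` entry; no retraining is involved, which is what makes RAG suited to business data that changes.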

Tool Use: The Computer

You can also give the model the ability to use external tools, such as calculators, search engines, or your company's APIs. This allows it to perform actions and retrieve information beyond its internal knowledge.
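A minimal Python sketch of the application side of tool use: the model replies with a structured request naming a tool and its arguments, and your code runs the matching function and returns the result. The JSON shape, the tool names, and `fake_model` (which stands in for a real LLM call) are all hypothetical; providers that support tool calling each define their own request and response formats.

```python
import json


# Tools the application exposes to the model (hypothetical names and behavior).
def get_order_status(order_id: str) -> str:
    fake_db = {"A123": "shipped", "B456": "processing"}  # stand-in for a company API
    return fake_db.get(order_id, "not found")


def add(a: float, b: float) -> float:
    return a + b  # a trivial "calculator"


TOOLS = {"get_order_status": get_order_status, "add": add}


def fake_model(prompt: str) -> str:
    # Stand-in for an LLM call; a real model decides whether and how to call a tool.
    return json.dumps({"tool": "get_order_status", "arguments": {"order_id": "A123"}})


def run_tool_call(model_output: str) -> str:
    """Parse the model's tool request, run the matching function, return its result as text."""
    call = json.loads(model_output)
    func = TOOLS.get(call["tool"])
    if func is None:
        raise ValueError(f"unknown tool: {call['tool']}")
    return str(func(**call["arguments"]))


result = run_tool_call(fake_model("Where is order A123?"))
print(result)  # shipped
```

In a full loop, the tool's result is passed back to the model so it can write the final answer for the user.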

The foundation model is the educated hire. RAG is their employee handbook. Tool Use is their computer. This combination creates a capable, knowledgeable, and effective AI assistant.

When Should You Train or Fine-Tune?

This doesn't mean training is never the answer. It makes sense in a few specific scenarios.

- To Change Core Behavior: When you need the model to adopt a very specific persona, style, or output format that can't be achieved through prompting or RAG—for instance, making it speak exclusively in iambic pentameter.

- For Highly Specialized Domains: In niche fields like specialized legal or medical domains, the vocabulary and concepts may be poorly represented in general models, warranting custom training.

- For Extreme Security/Regulatory Needs: When data absolutely cannot leave your environment, a fully self-hosted, air-gapped model may be the only option.

Build on the Shoulders of Giants

For the vast majority of use cases, the path to a domain-aware AI is not to train from scratch.

The winning formula is to start with a state-of-the-art foundation model, add knowledge and context with RAG, and give it capabilities with Tool Use. Consider fine-tuning only for those rare and specific behavioral changes you can't achieve otherwise.

The goal isn't to rebuild the foundation; it's to build your unique application on top of the strongest one available.